Runtime: The Foundation of Next-Generation Vulnerability Management

Learn what “runtime” means for vulnerability management and how runtime telemetry shows which vulnerabilities are truly exploitable, not just theoretically present.


“Runtime” has become a term security tools use without committing to a meaning. CNAPP platforms invoke it for container workload monitoring. Endpoint security applies it to behavioral threat detection. Application security uses it to describe production observation. The word appears everywhere, meaning something different each time.

For vulnerability management, we believe there is one definition of runtime that matters: observing software behavior to determine which vulnerabilities are actually exploitable.

Exploitation requires three conditions. Vulnerable code must execute. Attacker input must reach it. Compensating controls must be absent. Dependency manifests tell you what’s installed. Static scans tell you what’s written. Neither tells you which of those three conditions are met in your environment. That’s the gap runtime closes.
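The three conditions form a conjunction: if any one fails, the finding is not exploitable in that environment. The sketch below assumes each condition has already been reduced to a yes/no observation. The `Finding` type and its field names are hypothetical and do not describe any product API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """One vulnerability finding plus runtime evidence about it (hypothetical model)."""
    vuln_id: str
    code_executes: bool      # was the vulnerable code path observed executing?
    input_reachable: bool    # can attacker-controlled input reach that code path?
    controls_present: bool   # is a compensating control in place?


def is_exploitable(f: Finding) -> bool:
    # All three conditions must hold; breaking any one breaks the chain.
    return f.code_executes and f.input_reachable and not f.controls_present


findings = [
    Finding("finding-a", code_executes=True, input_reachable=True, controls_present=False),
    Finding("finding-b", code_executes=False, input_reachable=True, controls_present=False),
    Finding("finding-c", code_executes=True, input_reachable=True, controls_present=True),
]

exploitable = [f.vuln_id for f in findings if is_exploitable(f)]
print(exploitable)  # ['finding-a']
```

A manifest or static scan can populate only `vuln_id`. The three booleans are the part that runtime evidence supplies.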

Runtime telemetry records what happens when code runs: which code paths execute, which system calls fire, which network connections form, which privileges activate. Not what’s configured or documented, but what actually happens on an endpoint. Behavioral evidence that separates theoretical vulnerabilities from exploitable ones.

The Data Gap in Modern Security

Security architecture evolved through specialization: EDR monitors threats, SIEM aggregates logs, SOAR automates response, CSPM audits cloud configurations, vulnerability scanners enumerate weaknesses, and threat intelligence tracks adversaries. Six tool categories, six partial pictures.

Network sensors see traffic patterns. Log aggregators see recorded events. Cloud APIs see configuration state. Scanners see installed components. None captures software behavior during execution.

The missing layer is behavioral. What does software do when it runs? Which functions execute? Which system calls fire? Which data flows where? Only runtime telemetry provides this view.

Why the Endpoint Is the Crucial Vantage Point

Most security data arrives after the fact: network telemetry captured at the perimeter, events aggregated from logs, configurations audited through APIs. Each arrives with a gap between activity and visibility.

The endpoint eliminates this delay. Code executes there. Behavior manifests there. Data captured at the endpoint reflects activity as it happens.

More importantly, the endpoint is where behavioral context converges: which process initiated an action, what privileges it held, which connections it formed, which filesystem operations it performed. By the time data reaches network sensors or log aggregators, this context fragments. Each system records its slice; the connections between them vanish.

Exploitability assessment requires exactly this convergence. Code execution, input sources, privileges, and network access must be correlated, and they align only at the execution point: the choke point through which every action passes, observable and assessable in context.
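To make the convergence concrete, here is a minimal sketch that assumes a sensor tags every event with the ID of the process that performed it. The event schema, PIDs, paths, and addresses are all hypothetical (203.0.113.0/24 is a documentation range). Grouping events by process reassembles context that separate tools would each see only a slice of.

```python
from collections import defaultdict

# Hypothetical, simplified endpoint events. Each carries the acting process ID.
events = [
    {"pid": 4121, "type": "process", "image": "/usr/sbin/nginx", "user": "www-data"},
    {"pid": 4121, "type": "network", "remote": "10.0.8.15:5432"},
    {"pid": 4388, "type": "process", "image": "/bin/sh", "user": "www-data", "ppid": 4121},
    {"pid": 4388, "type": "file", "op": "read", "path": "/etc/passwd"},
    {"pid": 4388, "type": "network", "remote": "203.0.113.7:4444"},
]


def correlate(events):
    """Group events by process so each action is seen alongside its process context."""
    context = defaultdict(lambda: {"process": None, "network": [], "file": []})
    for e in events:
        ctx = context[e["pid"]]
        if e["type"] == "process":
            ctx["process"] = e
        elif e["type"] == "network":
            ctx["network"].append(e["remote"])
        elif e["type"] == "file":
            ctx["file"].append((e["op"], e["path"]))
    return dict(context)


ctx = correlate(events)
shell = ctx[4388]
print(shell["process"]["image"], shell["process"]["ppid"], shell["network"], shell["file"])
```

The shell's record now holds its parent (the web server's PID), its user, its outbound connection, and its file read together, so a web server spawning a shell that reads files and connects outward reads as one story rather than three unrelated records. A real sensor would also have to handle PID reuse, for example by keying on PID plus process start time.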

The World Runtime Was Built For

Traditional security models assume software is known, vetted, and stable. DevSecOps has strengthened these processes, but four trends are eroding the assumptions themselves.

Citizen Developer Risk

Business analysts automating reports. Marketing teams building campaign workflows. Finance teams constructing budget models with integrated data pulls. Each builds applications that look simple but carry security implications: workflows pulling customer data, dashboards aggregating financials, automations triggering on email.

Traditional visibility fails because vulnerability scanners require package managers and dependency manifests. These applications have none. They exist outside the observable surface security tools expect. Runtime closes this gap. It watches what executes, however that software came to exist.

AI-Generated Code Risk

The distinctive risk is velocity. Code goes from prompt to execution in seconds. No review cycle. No dependency scan. No approval workflow. An employee prompts an LLM, pastes code into a terminal, and runs it. The code may never persist, existing only in memory, then vanishing.

Traditional visibility fails because the code lives in terminal sessions and temporary files. No repository to scan. No manifest to parse. EDR sees the Python process but not what it does with data. Employees will continue using AI to generate code. The capability is too useful for policy alone to manage. Runtime provides visibility into what that code does when it runs.

Ephemeral Application Risk

Scripts, one-off automation, disposable tools built for a single purpose. The code vanishes; the effects remain. The migration script that cached credentials left them in a config file. The one-time automation modified permissions that stayed changed.

Traditional visibility fails because scanners require something to scan. By the time scheduled audits run, the code is gone. The residual risk remains invisible. Runtime observes behavior during execution, regardless of persistence. A script running for thirty seconds still makes system calls, opens connections, and modifies files. The record persists when the code does not.

Connectivity Risk

Every enterprise application connects to external services through OAuth tokens, API keys, and service accounts. Enterprises manage thousands, each a potential pathway for unauthorized access.

The Model Context Protocol (MCP) amplifies this. MCP standardizes how AI agents interact with external services, so an AI assistant with MCP access to calendar, email, and cloud storage operates at a far broader scope than a single-purpose integration. The agent model multiplies what any credential set can access.

Traditional visibility fails because authorization systems show what’s permitted and audit logs show what’s recorded. Neither tracks connection behavior in context. Runtime observes every external connection with process context: which application initiated it, what credentials authenticated it, and what data transferred.

The Common Thread

Four trends, one pattern: code that traditional tools cannot see, running with permissions they cannot track, connecting to services they cannot monitor. Traditional tools require something to examine: a repository, manifest, deployment record, configuration file. Runtime requires only execution. Whatever runs, however it arrived, gets observed.

What Runtime Telemetry Actually Is

Runtime telemetry records software behavior during execution: what happens when code runs, not what configuration intends.

Process execution: Which programs start, what arguments they receive, and what parent processes spawned them. A web server spawning a shell subprocess tells a different story than one handling HTTP requests.

System calls: The interface between applications and the operating system. Monitoring these reveals which operating system resources an application actually uses: the files it opens, the sockets it creates, the processes it spawns.

Network activity: Which connections form, to what destinations, using which protocols. Runtime records the encryption negotiated, the data transferred, and the external services contacted.

File system operations: Which files are read, written, created, or deleted. The difference between an application with read access to /etc/shadow and one that actually reads it is the difference between theoretical and realized risk.

Privilege context: What permissions the process holds, and what user identity it runs under. Runtime distinguishes between granted and exercised privileges.

Over time, these observations accumulate into behavioral fingerprints: profiles of how software operates in each deployment. Inventory draws the map; runtime watches the traffic. The map tells you roads exist. Traffic tells you which roads carry vehicles. Same infrastructure, different operational reality.
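The gap between granted and exercised privileges, and the basic idea of a fingerprint, can be sketched with set operations and a counter. This assumes behaviors have already been normalized into labels. The `"read:/etc/shadow"`-style encoding and all of the data are hypothetical.

```python
from collections import Counter

# Hypothetical privileges granted to one application in one deployment.
granted = {"read:/etc/shadow", "write:/var/log/app", "net:outbound", "cap:CAP_NET_ADMIN"}

# Hypothetical behaviors actually observed while it ran.
observed = [
    "write:/var/log/app",
    "net:outbound",
    "write:/var/log/app",
    "write:/var/log/app",
]

# Privileges exercised at runtime versus those merely granted.
exercised = granted & set(observed)
unused = granted - exercised

# A crude behavioral fingerprint: how often each behavior occurred.
fingerprint = Counter(observed)

print(sorted(exercised))           # ['net:outbound', 'write:/var/log/app']
print(sorted(unused))              # ['cap:CAP_NET_ADMIN', 'read:/etc/shadow']
print(fingerprint.most_common(1))  # [('write:/var/log/app', 3)]
```

Here the application is allowed to read `/etc/shadow` but never did during the observation window, which is the difference between theoretical and realized risk. A production fingerprint would carry far more dimensions (arguments, timing, parent processes) than a frequency count.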

The same software in different environments produces different behavior. A critical vulnerability in one configuration may be unexploitable in another. Static analysis flags both identically. Runtime differentiates them based on what actually executes.
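In this minimal sketch, both deployments run the same build with the same library version, so a static scan would flag them identically. It assumes function-level execution data is available. The library, function names, and observations are hypothetical.

```python
# Hypothetical: the flaw in this library version lives in a single function.
VULNERABLE_FUNCTIONS = {"imglib.decode_tiff"}

# Functions observed executing in two deployments of the same application build
# (hypothetical data; both deployments have the same imglib version installed).
observed_calls = {
    "deployment-a": {"app.handle_upload", "imglib.decode_png", "imglib.decode_tiff"},
    "deployment-b": {"app.handle_upload", "imglib.decode_png"},  # TIFF uploads disabled
}


def vulnerable_code_observed(calls: set[str]) -> bool:
    """True if any known-vulnerable function was seen executing."""
    return not VULNERABLE_FUNCTIONS.isdisjoint(calls)


results = {name: vulnerable_code_observed(calls) for name, calls in observed_calls.items()}
print(results)  # {'deployment-a': True, 'deployment-b': False}
```

Not observing a call over a given window is strong evidence rather than proof that the code never runs, which is one reason longer observation makes the distinction more reliable.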

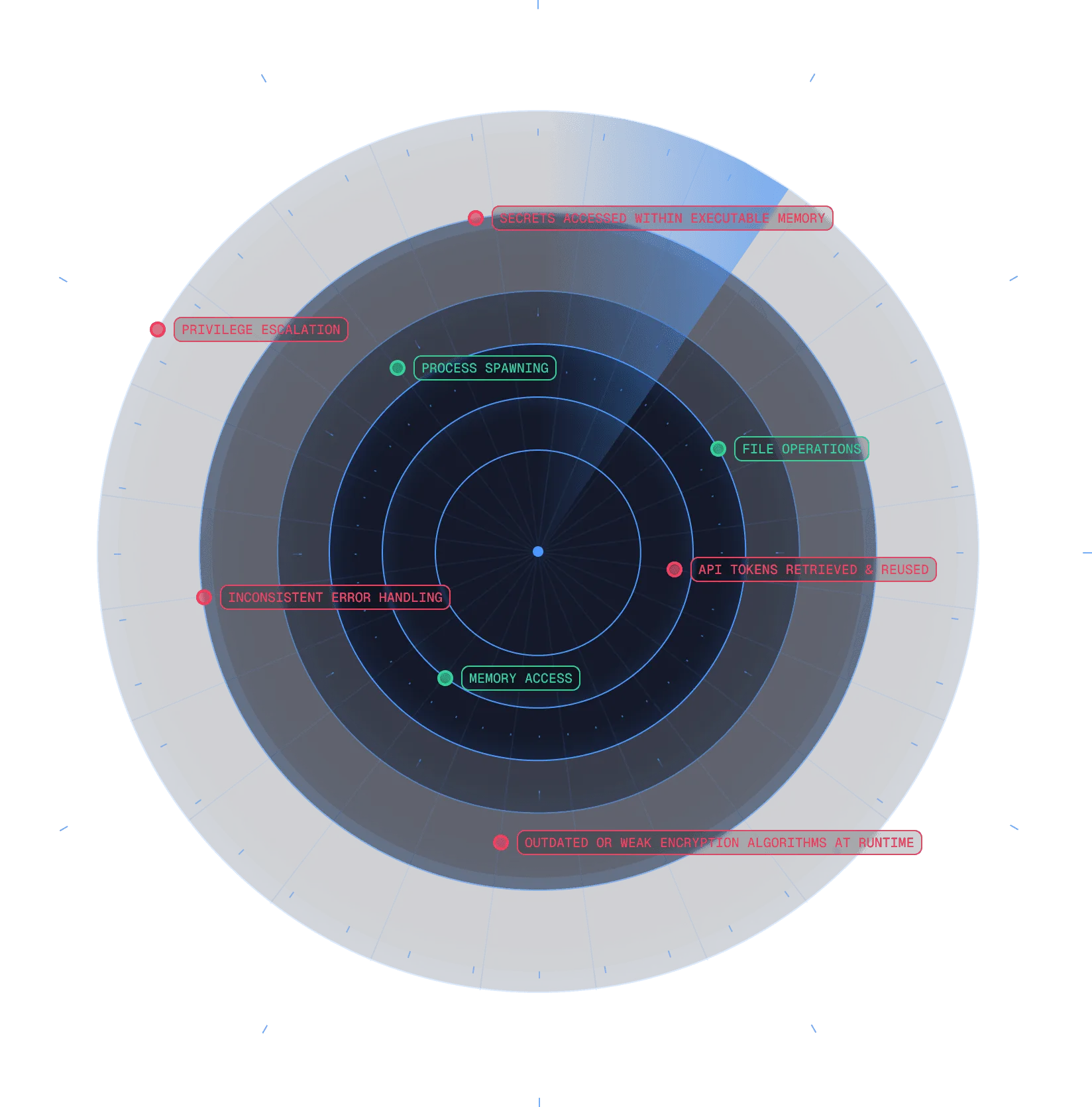

Examples of runtime insights. Green indicates normal software behavior in your environment (from baseline analysis); red indicates anomalous behavior. Spektion reveals runtime insights so you can take action.

What Runtime Observation Makes Possible

Coverage beyond CVE databases. Much enterprise software, including custom applications, internal tools, and citizen-developed workflows, exists outside CVE databases. Runtime tracks behavior regardless of CVE coverage.

Earlier warning than publication timelines. CVE databases document known vulnerabilities. Runtime monitors behavior patterns. Execution exhibiting risky characteristics provides signals independent of whether a CVE exists.

Compounding intelligence. Behavioral baselines grow more precise over time. Week one establishes broad norms. Month one reveals timing patterns. The more precisely we understand normal, the more reliably we identify abnormal.
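One simple way to picture how a baseline firms up over time is a per-process frequency count: a behavior becomes "normal" only after it has been seen enough times, and anything below that threshold is flagged. This minimal sketch assumes behaviors are already normalized into strings. The `Baseline` class, the `min_count` threshold, and the data are hypothetical. Real baselining would also model timing, arguments, and per-environment context.

```python
from collections import Counter, defaultdict


class Baseline:
    """Hypothetical per-process behavioral baseline built from observed events."""

    def __init__(self, min_count: int = 3):
        self.min_count = min_count          # sightings needed before a behavior is "normal"
        self.counts = defaultdict(Counter)  # process -> behavior -> sightings

    def observe(self, process: str, behavior: str) -> None:
        self.counts[process][behavior] += 1

    def is_anomalous(self, process: str, behavior: str) -> bool:
        # Behaviors seen fewer than min_count times for this process are not yet normal.
        return self.counts[process][behavior] < self.min_count


baseline = Baseline(min_count=3)
for _ in range(50):
    baseline.observe("nginx", "net:listen:443")
    baseline.observe("nginx", "file:read:/var/www")

print(baseline.is_anomalous("nginx", "net:listen:443"))  # False
print(baseline.is_anomalous("nginx", "exec:/bin/sh"))    # True
```

More observations sharpen the picture: with a week of data, a rare but legitimate behavior may still sit below the threshold; with a month, it crosses it and stops generating noise.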

As behavioral data accumulates, it enables more targeted prioritization (not “this CVE exists” but “deployments with this behavioral profile have historically been exploited”), more specific remediation guidance (not “patch this library” but “disable this code path”), and earlier warning signals, as patterns that preceded exploitation become detectable.

The Paradigm Shift: From Inventory to Behavior

For decades, security operated on an inventory model: catalog assets, enumerate dependencies, match installed components against vulnerability databases. This served well when software was known, vetted, and stable.

That model is no longer enough on its own. Citizen developers build without security review. AI generates code that never enters repositories. Applications connect through APIs and MCP servers at scale. The attack surface grows faster than inventory systems can track.

Runtime observation represents what this new landscape requires. Not instead of inventory. Alongside it.

Inventory asks: What do we have? Behavior asks: What is happening?

Inventory catalogs components. Behavior watches execution.

Inventory infers risk from presence. Behavior measures risk from activity.

That’s why we started with a definition. “Runtime” has become a word vendors use to signal modernity without committing to meaning. Same word, different capabilities, no clarity about which one solves which problem.

For vulnerability management, one runtime definition matters: observing software behavior to determine which vulnerabilities are actually exploitable. Behavioral telemetry at the endpoint that answers whether vulnerable code executes, whether attacker input can reach it, and whether compensating controls are in place. That’s the runtime that turns thousands of findings into a prioritized list you can act on. That’s what we built.